SLAM (simultaneous localization and mapping) is a key technology that mobile robots rely on to explore unknown areas. Mainstream SLAM methods fall into two main categories: visual and laser-based. Localization based on visual features is strongly affected by lighting and by how fast the camera moves, so mobile robots tend to lose track of visual features when moving quickly or in poorly lit scenes such as coal mine tunnels. Coal mine tunnels are especially challenging. The ground is often uneven, which makes the robot's motion very bumpy, and combined with uneven lighting this makes accurate autonomous localization and mapping difficult.

To address localization and mapping in environments similar to underground coal mine tunnels, Daixian Zhu's team at Xi'an University of Science and Technology improved a localization and mapping algorithm based on a monocular camera and an IMU. The team made three main changes. They designed a feature matching method that combines point and line features, making the algorithm more reliable in harsh and poorly illuminated scenes. They used a tightly coupled method to build visual feature constraints and IMU pre-integration constraints. Finally, they completed state estimation with a sliding-window, keyframe-based nonlinear optimization.
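IMU pre-integration summarizes all inertial samples between two keyframes into a single relative-motion constraint, so the optimizer does not have to re-integrate raw measurements every time a state estimate changes. The sketch below shows the widely used discrete update for the rotation, velocity, and position deltas. It assumes constant biases and ignores noise propagation, covariance, and bias Jacobians. The function names are hypothetical, and this is not the authors' implementation.

```python
import math


def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def so3_exp(w):
    """Rodrigues' formula: rotation matrix for the rotation vector w (radians)."""
    theta = math.sqrt(sum(c * c for c in w))
    if theta < 1e-12:
        # First-order approximation near zero rotation: I + [w]_x
        x, y, z = w
        return [[1.0, -z, y], [z, 1.0, -x], [-y, x, 1.0]]
    kx, ky, kz = (c / theta for c in w)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    K2 = mat_mul(K, K)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + c * K2[i][j] for j in range(3)]
            for i in range(3)]


def preintegrate(imu_samples, dt, bias_gyro=(0.0, 0.0, 0.0), bias_acc=(0.0, 0.0, 0.0)):
    """Accumulate rotation, velocity and position deltas between two keyframes.

    imu_samples: list of (gyro, accel) pairs, each a body-frame 3-vector.
    Gravity is not removed here; in the usual formulation it enters the
    residual when the deltas are compared with the keyframe states.
    """
    dR = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    dv = [0.0, 0.0, 0.0]
    dp = [0.0, 0.0, 0.0]
    for gyro, acc in imu_samples:
        a = [acc[i] - bias_acc[i] for i in range(3)]
        w = [gyro[i] - bias_gyro[i] for i in range(3)]
        a_rot = mat_vec(dR, a)  # acceleration rotated into the first keyframe's frame
        # Position uses the previous velocity and rotation, so update it first.
        dp = [dp[i] + dv[i] * dt + 0.5 * a_rot[i] * dt * dt for i in range(3)]
        dv = [dv[i] + a_rot[i] * dt for i in range(3)]
        dR = mat_mul(dR, so3_exp([w[i] * dt for i in range(3)]))
    return dR, dv, dp
```

For example, ten samples of 1 m/s² forward acceleration at 0.1 s intervals with no rotation give a velocity change of about 1 m/s and a position change of about 0.5 m.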

As the block diagram below shows, the system consists of three main parts: the front end, system initialization, and the back end.

System Block Diagram

The team tested this approach in several different environments.

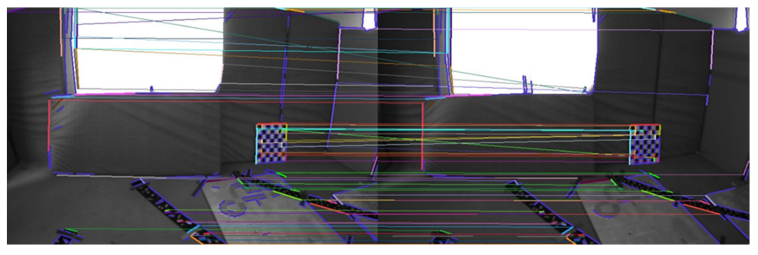

To evaluate how reliable the front-end feature extraction and matching algorithms are under drastic illumination changes and sparse image texture, the team selected two consecutive frames with a large difference in exposure.

V1_03 Feature point extraction and matching results

The experimental statistics show that the correct matching rate for feature points is significantly lower than for feature lines. Feature line extraction and matching are less affected by lighting changes and remain more reliable across lighting conditions. Combining point features with line features therefore helps the algorithm avoid losing features in scenes with drastic lighting changes, which improves its reliability.
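The correct matching rate compared here is the fraction of putative matches between two frames that agree with the true correspondences. A minimal sketch follows. It assumes that each point or line feature carries a binary descriptor (an LBD-style descriptor is a common choice for line segments, but this article does not say which descriptors the authors use). It also assumes that matches are accepted by mutual nearest neighbour under Hamming distance with a distance threshold. The function names and the threshold are hypothetical.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def mutual_nn_matches(desc_a, desc_b, max_dist):
    """Return (i, j) pairs where i and j are each other's nearest neighbour.

    A pair is kept only if its Hamming distance is at most max_dist.
    """
    if not desc_a or not desc_b:
        return []
    best_ab = [min(range(len(desc_b)), key=lambda j: hamming(da, desc_b[j])) for da in desc_a]
    best_ba = [min(range(len(desc_a)), key=lambda i: hamming(desc_a[i], db)) for db in desc_b]
    return [(i, j) for i, j in enumerate(best_ab)
            if best_ba[j] == i and hamming(desc_a[i], desc_b[j]) <= max_dist]


def correct_match_rate(matches, ground_truth):
    """Fraction of matches that appear in the ground-truth correspondence set."""
    if not matches:
        return 0.0
    correct = sum(1 for m in matches if m in ground_truth)
    return correct / len(matches)
```

The mutual check rejects one-directional matches. This tends to raise the correct rate at the cost of fewer matches, which is why the rate should be read together with the match count.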

Odometry accuracy assessment: The figure below color-codes the absolute pose error (APE) along each trajectory. The trajectories estimated by the team's algorithm stay close to the ground-truth trajectories in the dataset, but the cumulative error grows with the distance traveled. Across the subsequences, the cumulative error is smaller (fewer red segments) in test scenes with simple textures, better lighting, and slower motion. This indicates that robot speed and lighting conditions strongly affect the algorithm's accuracy.

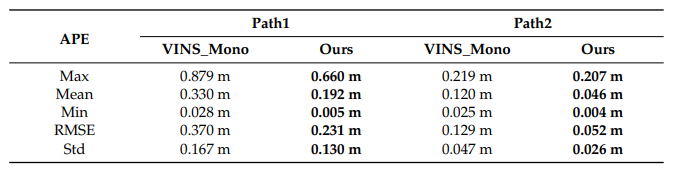
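APE, as commonly computed by tools such as evo, compares each estimated pose with its time-matched ground-truth pose after the two trajectories have been aligned. The sketch below covers only the translational part and assumes the trajectories are already time-associated and aligned into the same frame (the alignment step, for example Umeyama's method, is omitted). It also pairs each error with the distance traveled, so drift growth with distance can be inspected. Both function names are hypothetical.

```python
import math


def ape_translation(est, gt):
    """Per-pose absolute position error ||p_est - p_gt||, in metres.

    Assumes est and gt are equally long lists of (x, y, z) positions that are
    already time-associated and expressed in the same (aligned) frame.
    """
    if len(est) != len(gt):
        raise ValueError("trajectories must have the same number of poses")
    return [math.dist(p, q) for p, q in zip(est, gt)]


def path_length(gt):
    """Cumulative distance traveled along the ground-truth path at each pose."""
    out, total = [0.0], 0.0
    for p, q in zip(gt, gt[1:]):
        total += math.dist(p, q)
        out.append(total)
    return out
```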

On two different paths, the trajectories estimated by the algorithm from Daixian Zhu's team had root mean square errors (RMSE) of 0.231 m and 0.052 m, respectively. Both are better than VINS-Mono's 0.37 m and 0.129 m on the same paths.

Ground-truth path vs. trajectory errors of the two algorithms

Error statistics between the two algorithms
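RMSE condenses the per-pose errors of a trajectory into one number, the square root of the mean squared error. The sketch below computes it and also converts the reported RMSE values into relative error reductions. The function names and the "path 1"/"path 2" labels are hypothetical. Only the four RMSE values come from this article.

```python
import math


def rmse(errors):
    """Root mean square of per-pose errors."""
    if not errors:
        raise ValueError("need at least one error value")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def relative_reduction(baseline, proposed):
    """Fractional error reduction of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline


# RMSE values reported in the article, in metres: (VINS-Mono, proposed).
reported = {"path 1": (0.37, 0.231), "path 2": (0.129, 0.052)}
for name, (vins, ours) in reported.items():
    # Prints 37.6% for path 1 and 59.7% for path 2.
    print(f"{name}: {relative_reduction(vins, ours):.1%} lower RMSE than VINS-Mono")
```

Expressed this way, the improvement over VINS-Mono is about 37.6% on the first path and 59.7% on the second.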

Validation on simulated data and in a real environment shows that the team's algorithm improves the localization and mapping accuracy of mobile robots in coal mine tunnel environments.

Original paper: https://www.mdpi.com/1424-8220/22/19/7437