A box of urgently needed medicine is delivered to a residential community by drone; a hot takeaway ordered with one tap arrives at the doorstep by drone within 10 minutes. These are no longer scenes found only in science fiction; they have already been realized. In practice, however, drone delivery still suffers from problems such as inaccurate displacement prediction and excessive landing deviation.

Li Daochun's research team at Beijing University of Aeronautics and Astronautics has proposed an autonomous landing scheme for UAVs based on multi-sensor fusion. The scheme uses a fused sensor system consisting of UWB (ultra-wideband), IMU (inertial measurement unit), and vision sensors to guide the UAV as it approaches and lands on a moving object.

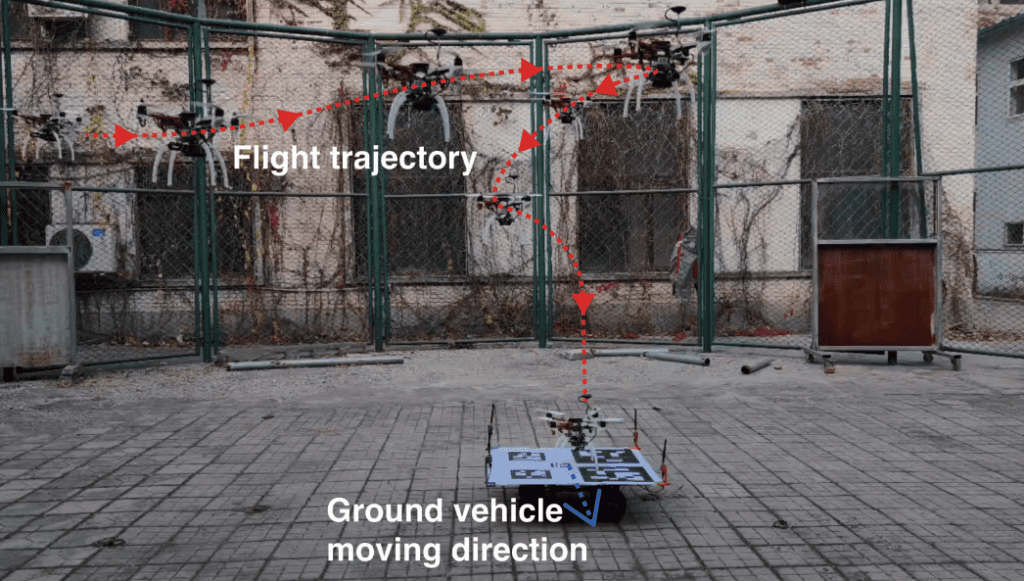
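The article does not say how the UWB and IMU data are fused. As a rough illustration of the general idea only, the following minimal sketch uses a one-dimensional Kalman filter in which IMU acceleration drives the prediction and a UWB position reading corrects it. The class name `UwbImuFusion1D`, the noise values, and the time step are all assumptions made for this example, not details from the team's scheme.

```python
class UwbImuFusion1D:
    """Illustrative 1-D Kalman filter; state is [position, velocity]."""

    def __init__(self, accel_var=0.5, uwb_var=0.01):
        self.p = 0.0                       # estimated relative position (m)
        self.v = 0.0                       # estimated relative velocity (m/s)
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = accel_var                 # assumed IMU acceleration noise variance
        self.r = uwb_var                   # assumed UWB position noise variance

    def predict(self, accel, dt):
        """Propagate the state with an IMU acceleration sample."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (a, b), (c, d) = self.P
        g0, g1 = 0.5 * dt * dt, dt         # how acceleration noise enters the state
        # P = F P F^T + Q with F = [[1, dt], [0, 1]]
        self.P = [
            [a + dt * (b + c) + dt * dt * d + g0 * g0 * self.q,
             b + dt * d + g0 * g1 * self.q],
            [c + dt * d + g0 * g1 * self.q,
             d + g1 * g1 * self.q],
        ]

    def update(self, uwb_position):
        """Correct the state with a UWB position measurement (H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r                     # innovation variance
        k0, k1 = a / s, c / s              # Kalman gain
        y = uwb_position - self.p          # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


# Usage: a stationary target 2 m away, IMU reporting zero acceleration.
fusion = UwbImuFusion1D()
for _ in range(50):
    fusion.predict(accel=0.0, dt=0.02)
    fusion.update(uwb_position=2.0)
print(round(fusion.p, 2))
```

A real system would track three axes and handle UWB ranging to several anchors; the sketch only shows the predict-with-IMU, correct-with-UWB pattern.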

The workflow of the proposed autonomous UAV landing scheme is divided into two phases: the approach phase and the landing phase. In the approach phase, the UAV is far from the landing platform and cannot acquire accurate visual information for attitude estimation, so it relies mainly on the UWB-IMU fusion positioning system to obtain its position relative to the moving ground platform. Once the UAV is close enough to the landing platform to capture visual information, it enters the landing phase, in which it is guided by both the visual information and the data provided by the UWB-IMU positioning system.
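The two-phase workflow described above could be sketched roughly as follows. This is a simplified illustration, not the team's implementation: the names `Measurements`, `select_phase`, and `relative_position` are hypothetical, and the fixed-weight blend of the two position sources in the landing phase is an assumption, since the article only says that both sources are used.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class Phase(Enum):
    APPROACH = "approach"
    LANDING = "landing"


@dataclass
class Measurements:
    uwb_imu_rel_pos: Vec3           # relative position from UWB-IMU fusion
    vision_rel_pos: Optional[Vec3]  # None while the landmark is not detected


def select_phase(m: Measurements) -> Phase:
    """Landing phase starts once visual information is available."""
    return Phase.LANDING if m.vision_rel_pos is not None else Phase.APPROACH


def relative_position(m: Measurements, vision_weight: float = 0.7) -> Vec3:
    """Pick the position estimate for the current phase.

    Approach: UWB-IMU only. Landing: weighted blend of vision and UWB-IMU
    (the fixed weight is an assumption made for this sketch).
    """
    if select_phase(m) is Phase.APPROACH:
        return m.uwb_imu_rel_pos
    w = vision_weight
    return tuple(w * v + (1.0 - w) * u
                 for v, u in zip(m.vision_rel_pos, m.uwb_imu_rel_pos))


# Usage: far away (no landmark detected) versus close to the platform.
far = Measurements(uwb_imu_rel_pos=(10.0, 0.0, 5.0), vision_rel_pos=None)
near = Measurements(uwb_imu_rel_pos=(1.0, 0.0, 2.0), vision_rel_pos=(0.0, 0.0, 2.0))
print(select_phase(far), select_phase(near))
```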

To accurately estimate the relative attitude of the UAV, the team designed a new landmark consisting of five separate ArUco markers of different sizes, as shown in Figure 1, so that it can be identified effectively at different altitudes. When the entire landmark is within the camera's field of view, the four outer markers are used for relative attitude estimation. As the UAV gets closer to the landmark, the outer markers leave the camera's field of view, while the middle marker continues to guide the UAV down onto the moving platform.
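The marker-selection logic described above can be illustrated with a small sketch. The marker IDs (0 to 3 for the outer markers, 4 for the middle one) and the function name `markers_for_estimation` are hypothetical; the article does not give the actual IDs, and real detection would come from an ArUco detector rather than a plain list.

```python
# Hypothetical marker IDs: the article does not specify the real ones.
OUTER_IDS = frozenset({0, 1, 2, 3})
CENTER_ID = 4


def markers_for_estimation(detected_ids):
    """Choose which detected markers to use for relative attitude estimation.

    - All four outer markers visible: use the outer markers.
    - Otherwise, if the middle marker is visible: use it alone.
    - Otherwise: nothing usable in this frame.
    """
    detected = set(detected_ids)
    if OUTER_IDS <= detected:
        return sorted(OUTER_IDS)
    if CENTER_ID in detected:
        return [CENTER_ID]
    return []


# Usage: whole landmark in view, then only the middle marker near touchdown.
print(markers_for_estimation([0, 1, 2, 3, 4]))
print(markers_for_estimation([4]))
```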

To verify the feasibility of the solution, the team first ran computer simulations and then conducted real-world experiments. The results showed that the scheme allows drones to land successfully and autonomously with sufficient accuracy in most common scenarios.

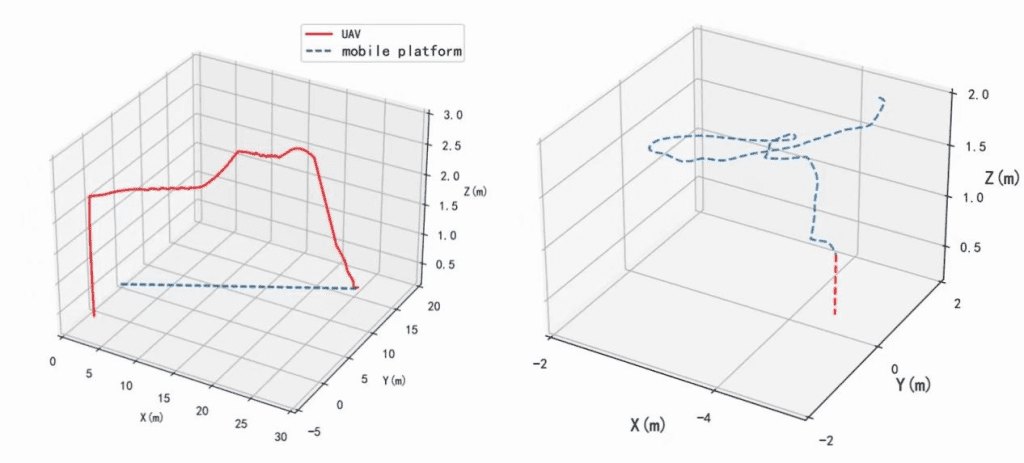

The landing trajectory of the autonomous landing simulation test in the Gazebo simulator is shown in the left panel of Figure 1. It can be seen that the UAV eliminates the position error in a very short time, continuously tracks the moving platform and successfully descends.

The trajectory of the drone in the outdoor real-world experiment is shown in the right panel of Figure 1. The blue dashed line indicates the flight trajectory of the drone guided by the proposed method during landing, and the red line shows the trajectory after the drone reaches a given threshold, when it gradually stops its propellers and lands on the landing platform. The experimental results show that the UWB-IMU-Vision framework can guide the UAV to the desired platform and complete the landing task autonomously with a landing error within 10 cm, showing good accuracy and reliability.

Technological development and business opportunities are often the unknown factor that overturns existing business ideas or business models. For now, large-scale deployment of drones still faces considerable difficulty in real-world application scenarios: drone delivery is mostly implemented in remote areas, and putting it into use within cities still requires further opening of policy and solutions to many technical problems.

[Dong, Xin, et al. "An Integrated UWB-IMU-Vision Framework for Autonomous Approaching and Landing of UAVs." Aerospace 9.12 (2022): 797.]