Wearable exoskeleton robots are an emerging high-tech product that can support human movement in rehabilitation training, daily activities and manufacturing tasks. A high-precision, low-latency human activity recognition system helps such robots identify what the wearer is doing quickly and accurately, so that they can better assist with each task.

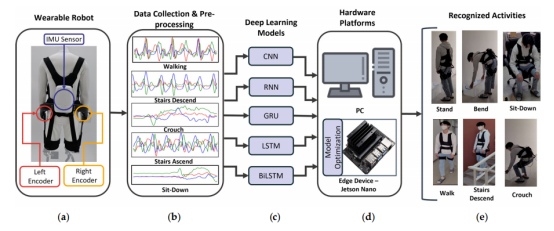

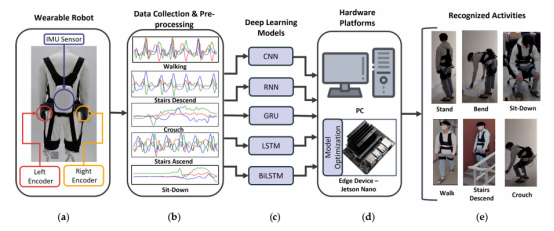

Tae-Seong Kim's team from South Korea tested whether a wearable robot can recognize human activity in real time, using a robot with an integrated IMU (inertial measurement unit) and a lightweight deep learning model embedded in its edge device.

Figure 1 Wearable robot and the human activity recognition system built on it: (a) Wearable exoskeleton robot (b) Data collection and pre-processing (c) Deep learning model (d) Hardware platform (e) Recognized activities

The wearable robot has two main sensor elements responsible for recording activity signals.

1. Two rotary encoders are located inside the actuator module and are responsible for measuring the hip joint angle.

2. A nine-axis IMU sensor is integrated in the robot's backpack.

Eight activities were considered for this experiment.

① Stand ② Stand up ③ Bend ④ Crouch

⑤ Walk ⑥ Sit down ⑦ Go upstairs (Stairs ascend) ⑧ Go downstairs (Stairs descend)

Two datasets were collected.

1. Ephemeral dataset: each of the eight activities is repeated multiple times, and the activity signals are recorded during each repetition. After preprocessing and augmentation, this dataset is segmented, with 80% used as the training set and 20% as the test set, to train, validate and optimize the deep learning models on the PC and the edge device.

2. Continuous dataset: multiple activities are performed in sequence. This dataset is used to test the feasibility of the human activity recognition system on the edge device.
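The segmentation and 80/20 split described above can be illustrated with a minimal sketch. It is not the team's actual pipeline: the 11-channel layout (two hip-angle channels plus nine IMU axes), the window length, the stride and the synthetic signals are all assumptions made for illustration, and `segment` and `train_test_split` are hypothetical helper names.

```python
import random

# Assumed layout: 2 hip-angle channels + 9 IMU axes = 11 values per sample.
N_CHANNELS = 11


def segment(recording, window, stride):
    """Cut one recording (a list of per-sample channel lists) into fixed-length windows."""
    return [recording[start:start + window]
            for start in range(0, len(recording) - window + 1, stride)]


def train_test_split(windows, labels, train_fraction=0.8, seed=0):
    """Shuffle windows and labels together, then split them by train_fraction."""
    order = list(range(len(windows)))
    random.Random(seed).shuffle(order)
    cut = int(len(order) * train_fraction)
    train = [(windows[i], labels[i]) for i in order[:cut]]
    test = [(windows[i], labels[i]) for i in order[cut:]]
    return train, test


# Synthetic stand-in for one recorded repetition of each of the eight activities.
rng = random.Random(42)
windows, labels = [], []
for activity_id in range(8):
    recording = [[rng.random() for _ in range(N_CHANNELS)] for _ in range(300)]
    for w in segment(recording, window=100, stride=50):  # hypothetical sizes
        windows.append(w)
        labels.append(activity_id)

train, test = train_test_split(windows, labels)
print(len(train), len(test))
```

With these assumed sizes each 300-sample recording yields five overlapping windows, so the 40 windows split into 32 for training and 8 for testing. In practice the window length would be chosen from the sensor sampling rate and the typical duration of an activity.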

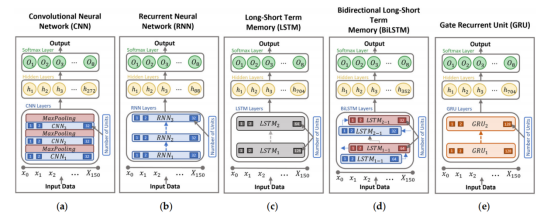

The team used five deep learning models for human activity recognition: CNN, RNN, LSTM, Bi-LSTM and GRU. The model structures are shown in Figure 2.

Figure 2 Deep learning model structure: (a) CNN (b) RNN (c) LSTM (d) Bi-LSTM (e) GRU

The experimental results show that the system recognizes the eight activities (standing, standing up, bending, crouching, walking, sitting down, and going up and down stairs) with an average accuracy of 97.35% and an inference time of less than 10 ms.
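An inference-time figure like this one is typically obtained by timing the model on many input windows and averaging. The sketch below shows one way to do that; it is not the measurement procedure used in the study. `dummy_predict` is a hypothetical stand-in for the deployed model, and the window shape (100 samples of 11 channels) is an assumption.

```python
import time


def mean_latency_ms(predict, windows, warmup=5):
    """Average wall-clock time of predict(window), in milliseconds."""
    for w in windows[:warmup]:  # warm-up calls are not timed
        predict(w)
    start = time.perf_counter()
    for w in windows:
        predict(w)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(windows)


def dummy_predict(window):
    """Hypothetical stand-in for the deployed model: returns an activity id in 0..7."""
    total = sum(sum(sample) for sample in window)
    return int(total) % 8


# 50 synthetic windows, each 100 samples x 11 channels (assumed shape).
windows = [[[0.01 * (i + j)] * 11 for j in range(100)] for i in range(50)]
latency = mean_latency_ms(dummy_predict, windows)
print(f"mean latency: {latency:.3f} ms per window")
```

Running the same function on the PC and on the edge device, with the real model in place of `dummy_predict`, would give comparable per-window latencies for the two platforms.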

The experiment validates the feasibility of using wearable robots for real-time human activity recognition, but it has one obvious drawback: transitional activities are misrecognized. This problem could be addressed in the future by deploying more IMU sensors or by modeling these activities and training the deep learning models on them. Such improvements would allow wearable robots to better help workers lift heavy objects and to assist patients more effectively during rehabilitation training.