Deep learning has been extensively explored for human behavior recognition based on inertial measurement units (IMUs). Recent approaches typically use hybrid models that combine convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to fuse multiple sensors and associate contextual information. However, these models often overlook the physical characteristics of the different sensors and the "forgetting defect" of RNNs (their difficulty retaining long-range information), which limits recognition performance.

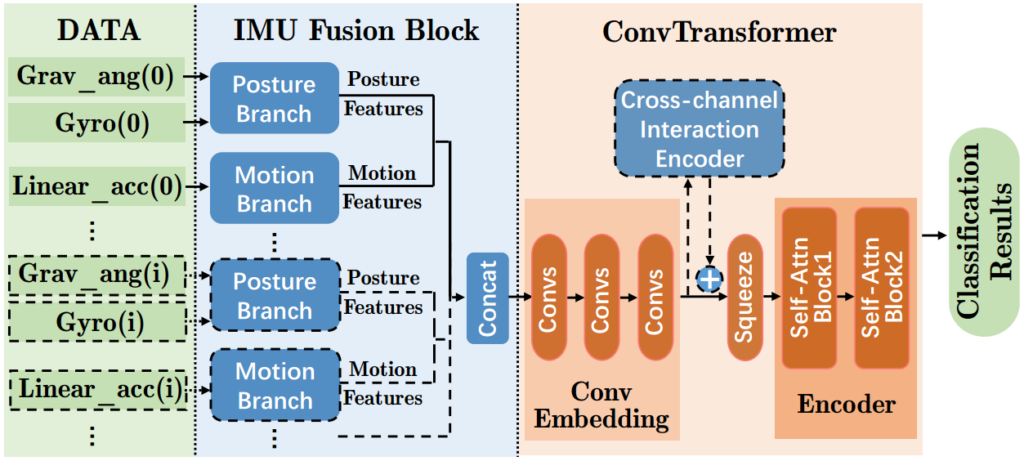

To address these issues, postdoctoral researcher Ye Zhang of Sun Yat-sen University proposed IF-ConvTransformer, a deep network framework for human behavior recognition that consists of an IMU fusion module and a ConvTransformer sub-network. The IMU fusion module is inspired by complementary filtering and adapts to the data characteristics of the gravity sensor and the gyroscope so that different IMU sensors are fused effectively. The ConvTransformer sub-network captures local and global temporal features through its convolutional and self-attention layers, which helps it build contextual associations. The method was validated on five smartphone-based and three wearable-device-based human behavior recognition datasets, where it outperforms the baseline methods by an average of 2.88% in weighted F1 (W-F1) score. The work also includes visualization experiments that further demonstrate its effectiveness. The code is open source at: https://github.com/crocodilegogogo/If-ConvTransformer.
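For readers unfamiliar with the technique that inspired the fusion module, the following is a minimal sketch of a classical, fixed-weight complementary filter. It is not the learned IMU fusion module of IF-ConvTransformer; it only illustrates the underlying idea of trusting integrated gyroscope rates over short time spans and a gravity-derived angle over long ones. The function name `complementary_filter`, the blending weight `alpha`, and the single-axis setup are illustrative assumptions.

```python
def complementary_filter(gravity_angles, gyro_rates, dt, alpha=0.98):
    """Fuse gravity-derived angles with gyroscope angular rates (one axis).

    gravity_angles: angle estimates (rad) derived from the gravity direction
    gyro_rates:     angular rates (rad/s) from the gyroscope
    dt:             sampling interval in seconds
    alpha:          weight on the gyroscope path (assumed value, 0..1)
    """
    if len(gravity_angles) != len(gyro_rates):
        raise ValueError("both inputs must have the same length")
    if not gravity_angles:
        return []

    # Start from the gravity-based estimate, which has no drift.
    angle = gravity_angles[0]
    fused = [angle]

    for grav_angle, rate in zip(gravity_angles[1:], gyro_rates[1:]):
        # Short-term path: integrate the gyroscope rate from the last estimate.
        gyro_angle = angle + rate * dt
        # Blend: the gyro path is smooth but drifts; the gravity path is
        # noisy but stable over the long term.
        angle = alpha * gyro_angle + (1.0 - alpha) * grav_angle
        fused.append(angle)

    return fused
```

With `alpha=1.0` the filter reduces to pure gyroscope integration, and with `alpha=0.0` it returns the gravity-derived angles unchanged. A learned fusion module can be seen as replacing this fixed weighting with parameters fitted to the data.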